R&D

OVERVIEW

At Cogent Labs, our goal is to build a preeminent team of engineers and researchers in the fields of AI and Deep Learning. Just as the machines of the industrial revolution multiplied the physical power of man by thousands, we believe that if applied to the right domains and problems, Deep Learning has the potential to multiply the cognitive and creative power of humanity by millions.

To seize this opportunity, Cogent Labs aims to bridge the gap between abstract theoretical research and practical applications that meet real-world business needs. Besides fostering creative approaches to known problems, the diverse backgrounds of our research team and its open, collaborative working environment promote the identification of entirely new tasks that can be solved by AI. Our team has competence in a wide range of domains within Deep Learning, and we are constantly pushing to expand it.

If you are passionate about research and discovery and share our vision of fundamentally transforming the world using AI technology, please get in touch.

Below is a short summary of some of our existing lines of research:

- Image Recognition

Vision is arguably the most important sense we employ to take in information from our environment and interact with the world. A disproportionate fraction of our brain’s processing power is dedicated to processing this constant stream of visual information. Yet, despite the apparent ease with which humans can make sense of even highly complex visual impressions, automating image analysis through machine learning has proven to be remarkably challenging until relatively recently.

Supervised image classification is a prime example of a task that has recently gone from being barely solvable by machines to one where machines can reach super-human performance using a wide range of off-the-shelf algorithms. More challenging problems, such as 3D reconstruction, image segmentation, or handwriting recognition, are now just at the threshold of reaching a similar level of performance. This in turn will allow for even more complex downstream tasks. Some of our current research efforts include:

Handwriting Recognition

Cogent Labs' handwriting technology Tegaki is the first step towards developing a framework that can automatically extract information from documents. While a relatively simple task for humans, (offline) handwriting recognition poses several unique challenges to deep learning systems, such as having to segment images into sequences of unknown length. Handwriting recognition for low-resource languages such as Japanese, which have previously received less commercial and academic attention, remains an open research area.

Hierarchical Document Understanding

We are developing systems for full hierarchical document understanding that can take in an entire document, segment it into its individual components such as text, figures and tables, and digitize them in an appropriate and useful way. Our research efforts are currently particularly focused on semi- and unsupervised learning approaches to achieve this segmentation and labeling of complex documents.

- Natural Language Processing

The ability to analyze and understand natural language is one of the key capabilities required of any AI that is to be of use to humans and that can make sense of human-generated data beyond simple number-crunching. Such an AI also offers users an intuitive and easily interpretable way to interact with it.

Traditional methods in Natural Language Processing (NLP) often employ highly simplified statistical approaches that treat a text as a simple “bag of words” and compute average statistics on it, completely ignoring contextual information. Other methods rely on large sets of hand-crafted linguistic rules and features that are rigid, time-consuming to define, and do not adapt to changes in the data. In recent years, Deep Learning has shown great potential in overcoming these issues, starting from simple word embeddings that can improve on bag-of-words representations, to fully end-to-end architectures that can summarize large texts, perform sentiment analysis, answer questions posed in natural language, and handle many more language-related tasks.
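To make the limitation of bag-of-words representations concrete, here is a minimal Python sketch. The tokenizer (lowercasing and splitting on whitespace) and the two example sentences are assumptions chosen for illustration, not part of any Cogent Labs system. It shows that two sentences with opposite meanings can map to exactly the same representation once word order is discarded.

```python
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Count lowercase whitespace-separated tokens, discarding their order."""
    return Counter(text.lower().split())


# Hypothetical example sentences that use the same words in a different order.
a = "the dog bit the man"
b = "the man bit the dog"

# The two counters are identical, so any model built on them
# cannot tell the sentences apart.
print(bag_of_words(a) == bag_of_words(b))
```

Context-aware representations are meant to keep exactly the ordering information that this counting step throws away.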

At Cogent Labs, we are interested in the full spectrum of Deep Learning applications in NLP and are committed to advancing the state of the art in AI language understanding. Some examples of our current research activities include:

Sequence-to-Sequence Learning

At the core of many deep-learning-based natural language systems is a sequence-to-sequence architecture that can compress text into an embedding vector, and then decompress the embedding into another text sequence. A wide range of tasks can be cast into this framework. Combined with attention mechanisms, for example, these models form the backbone of many current systems for neural translation, summarization, and more.
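The attention mechanism mentioned above can be sketched in a few lines of plain Python. This is an illustrative dot-product attention step under stated assumptions, not a description of any particular production model: the function names `softmax` and `attend`, the two-dimensional encoder states, and the decoder query are all hypothetical. The decoder scores each encoder state against its current query, normalizes the scores into weights, and reads out a weighted average as context.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, encoder_states):
    """Return attention weights and the weighted-average context vector."""
    # Dot-product score between the decoder query and each encoder state.
    scores = [sum(q * h for q, h in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(w * state[i] for w, state in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context


# Hypothetical 2-dimensional encoder states for a three-token input.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_query = [0.0, 2.0]

weights, context = attend(decoder_query, encoder_states)
print([round(w, 3) for w in weights])
print([round(c, 3) for c in context])
```

Because the context vector is recomputed for every decoding step, the decoder is no longer limited to a single fixed-size embedding of the whole input.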

Latent Variable Models

The text embedding vectors encountered in sequence-to-sequence models are not only useful as features in more complex downstream tasks such as summarization, but are also useful in their own right. We are particularly interested in latent variable models that allow for unsupervised tasks such as clustering and similarity analysis, as well as semi-supervised tasks such as automatic labeling, categorization, and sentiment analysis. Our research team’s background in statistics and various relevant branches of physics gives us a distinctive view on variational approaches and high-dimensional embedding spaces.
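As a small illustration of similarity analysis on embedding vectors, the sketch below ranks documents by cosine similarity. The three-dimensional vectors and document names are made up for the example; real embeddings would come from a trained model and have far more dimensions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(name, embeddings):
    """Return the key whose embedding is closest in angle to `name`'s."""
    query = embeddings[name]
    others = (k for k in embeddings if k != name)
    return max(others, key=lambda k: cosine_similarity(query, embeddings[k]))


# Hypothetical 3-dimensional text embeddings (illustrative values only).
embeddings = {
    "invoice_a": [0.9, 0.1, 0.0],
    "invoice_b": [0.8, 0.2, 0.1],
    "press_release": [0.0, 0.2, 0.9],
}

print(most_similar("invoice_a", embeddings))
```

The same nearest-neighbor lookup is a common starting point for clustering and for propagating labels from a few annotated documents to unlabeled ones.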

Information Extraction

Another active area of research at Cogent Labs is the extraction of information from textual data. Despite the advances of deep learning, most approaches are still merely statistical models of language, lacking a true understanding of meaning and global context. Starting from simple entity extraction and leading up to automatic construction of knowledge graphs based on entire corpora of text, we believe that information extraction will play a key role in future advances in AI, not only assisting other NLP tasks, for example by enabling summarization that depends on global context, but also giving other systems direct access to a constantly growing knowledge base.

- Time-Series

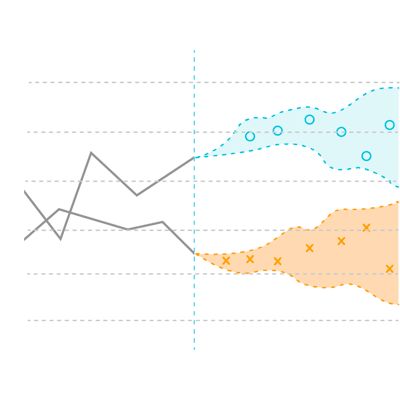

A vast number of real-world processes generate data that can be cast as time-series. Being able to analyze, understand and predict a given time-series, and potentially its underlying process, therefore opens up an enormous range of potential applications. Examples include time-sensitive event classification (medical diagnostics, speaker recognition), forecasting (financial market prediction), anomaly detection (protection of industrial processes or machinery operation) and many others.

Time-series are often characterized by long-range temporal dependencies, which can cause two otherwise identical points in time to represent different classes or to predict different future states. This temporal correlation of data points generally increases the difficulty of time-series analysis and prediction. Most current state-of-the-art techniques depend on hand-crafted features that usually require expert knowledge in the field and are expensive to develop. However, deep learning now allows for very flexible and data-driven design of adaptive models to deal with time-series-related problems.
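The point about identical values leading to different futures can be shown with a toy example. The series and the helper `sliding_windows` below are hypothetical and only meant as a sketch: with a history of length 1, the value 2 is followed by both 3 and 1, while a history of length 2 is enough to tell the rising case from the falling one.

```python
def sliding_windows(series, window):
    """Pair each length-`window` history with the value that follows it."""
    return [
        (tuple(series[i:i + window]), series[i + window])
        for i in range(len(series) - window)
    ]


# Hypothetical series: 2 is followed by 3 on the way up and by 1 on the way down.
series = [1, 2, 3, 2, 1, 2, 3]

# With one step of history, the same input (2,) maps to different targets.
for history, target in sliding_windows(series, window=1):
    print(history, "->", target)

# With two steps of history, (1, 2) and (3, 2) are distinguishable inputs.
for history, target in sliding_windows(series, window=2):
    print(history, "->", target)
```

Real dependencies can span far more than two steps, which is one reason models that learn how much history to use are attractive compared with a fixed, hand-chosen window.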

At Cogent Labs, we focus on the development of deep learning techniques for time-series classification, forecasting and fault detection, with a special emphasis on end-to-end deep learning pipelines that allow for fully data-driven and adaptive methods. We are currently pursuing the following:

Financial Time-Series Forecasting

Being able to automatically predict market trends and directions with high accuracy provides a tremendous advantage in many areas of finance. Cogent Labs’ research team is applying its extensive finance expertise and cutting-edge deep learning methodology to this domain. Deep learning enables us to fuse and jointly analyze multimodal information streams such as stock prices, FX rates, and real-time news feeds. Integrating a multitude of data feeds into unified, actionable predictions is one of our core research themes.

PUBLICATION

Neural SDE - Information propagation through the lens of diffusion processes

Stefano Peluchetti, Stefano Favaro

Abstract: When the parameters are independently and identically distributed (initialized) neural networks exhibit undesirable properties that emerge as the number of layers increases. These issues include a vanishing dependency on the input and a concentration on restrictive families of functions including constant functions. We consider parameter distributions that shrink as the number of layers increases in order to recover well-behaved stochastic processes in the limit of infinite total depth. Doing so we establish the connection between infinitely deep residual networks and solutions to stochastic differential equations, i.e. diffusion processes. We show that these limiting processes do not suffer from the aforementioned issues and investigate their properties.

An empirical study of pretrained representations for few-shot classification

Tiago Ramalho, Thierry Sousbie, Stefano Peluchetti

Abstract: Recent algorithms with state-of-the-art few-shot classification results start their procedure by computing data features output by a large pretrained model. In this paper we systematically investigate which models provide the best representations for a few-shot image classification task when pretrained on the Imagenet dataset. We test their representations when used as the starting point for different few-shot classification algorithms. We observe that models trained on a supervised classification task have higher performance than models trained in an unsupervised manner even when transferred to out-of-distribution datasets. Models trained with adversarial robustness transfer better, while having slightly lower accuracy than supervised models.

Adaptive Posterior Learning: few-shot learning with a surprise-based memory module

Tiago Ramalho, Marta Garnelo

Abstract: The ability to generalize quickly from few observations is crucial for intelligent systems. In this paper we introduce APL, an algorithm that approximates probability distributions by remembering the most surprising observations it has encountered. These past observations are recalled from an external memory module and processed by a decoder network that can combine information from different memory slots to generalize beyond direct recall. We show this algorithm can perform as well as state of the art baselines on few-shot classification benchmarks with a smaller memory footprint. In addition, its memory compression allows it to scale to thousands of unknown labels. Finally, we introduce a meta-learning reasoning task which is more challenging than direct classification. In this setting, APL is able to generalize with fewer than one example per class via deductive reasoning.

PROFILES

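Thierry Sousbie, Ph.D.

Principal Research Scientist

Holds a Ph.D. in astrophysics from École Normale Supérieure de Lyon, one of the French grandes écoles. He conducted astrophysics research at the French National Centre for Scientific Research (CNRS) and the University of Tokyo. As a researcher, he has published more than 30 scientific papers and worked on several open-source projects. He has deep knowledge of parallel numerical simulation of fluid dynamics, computational geometry, and deep learning.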

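Stefano Peluchetti, Ph.D.

Principal Research Scientist

Has extensive experience in statistics and machine learning, with a specialization in Monte Carlo methods. He is the sole author of an open-source framework built on LuaJIT and has worked at HSBC as a data scientist and quantitative analyst. He has a strong interest in probabilistic modeling and black-box adaptive methods. He holds a Ph.D. in statistics from Bocconi University.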

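David Malkin, Ph.D.

AI Architect

Holds a Ph.D. in machine learning from University College London. He has extensive experience using genetic algorithms to optimize nonlinear, high-dimensional dynamic solution methods. He has also been involved in deep learning projects using a variety of frameworks and network architectures, including the development of trading algorithms based on machine learning. In recent years, he has conducted cutting-edge research on non-Euclidean data.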

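Richard Harris, Ph.D.

Senior Machine Learning Science Manager

Earned a Ph.D. in pure mathematics from the University of Cambridge, specializing in symplectic topology. He has worked at several startups on products such as credit card fraud detection and personalization services. With extensive experience in roles including software engineer, data scientist, machine learning engineer, and machine learning scientist, he has deep expertise in every aspect of taking practical machine learning models from development to production.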